In this post, I will cover how to use easyPubMed (an R package) to retrieve data from PubMed. The example focuses on extracting data from PubMed records for a targeting campaign, and aims to suggest a business-oriented way of using the data included in PubMed records. It presents a hypothetical case study that follows the workflow of a Data Mining problem under the CRISP-DM model, focusing on the business understanding, data understanding and data preparation steps.

Note. For illustrating the potential of easyPubMed as a tool for extraction of valuable data from PubMed records, I also prepared a package named businessPubMed (available on GitHub). If you just want to skip the step-by-step description and get quickly started with this analysis, you can proceed to the section: Step 5, prepare and return.

Note! The easyPubMed library has significantly evolved since this content was first made available. It now incorporates elements that automate some of the operations discussed below. While the code below may NOT be compatible with the latest version of easyPubMed, this post is maintained in its original format for legacy purposes. For more info about the latest version of easyPubMed, please see: https://www.data-pulse.com/dev_site/easypubmed/.

Business Understanding

Our company recently developed some innovative products in a certain market segment (for example, in the field of immunology) and we are now trying to contact potential customers that may be interested in our offer. Since our company is a brand-new start-up, we do not have any list of past or potential customers to contact. Our goal is to obtain one. Indeed, we want to get a list of potential customers from scratch, only using freely available information from selected PubMed records.

Data Understanding

PubMed is a great place to start when looking for information about scientific research. It is an online repository of scientific publication records maintained at NCBI/NIH, and it can be queried via a Web interface as well as programmatically, for example via R and the easyPubMed package. PubMed is widely used by the scientific community. Typically, researchers query PubMed almost daily to stay up-to-date with the scientific literature available in their field of study. At the same time, PubMed records carry very valuable information from a business/market perspective. Let’s have a look at a sample PubMed record. We can retrieve it using R and a few lines of code.

library(easyPubMed)
first_PM_query <- "T cell receptor" # text of the query
first_PM_records <- get_pubmed_ids(first_PM_query) # submit the query to PubMed
fetch_pubmed_data(first_PM_records, retmax = 1) # retrieve the first output record

This is an excerpt of the console output from the previous example (NOTE: since the most recent record is returned, it is likely that the result shown here below will differ from the one you will get if you are running the same code again).

<?xml version="1.0" encoding="UTF-8"?>

<PubmedArticleSet>

<PubmedArticle>

<MedlineCitation Status="Publisher" Owner="NLM">

<PMID Version="1">28237836</PMID>

<DateCreated>

<Year>2017</Year>

<Month>02</Month>

<Day>26</Day>

</DateCreated>

<Article PubModel="Print-Electronic">

<Journal>

<ISSN IssnType="Electronic">1525-0024</ISSN>

<Title>Molecular therapy : the journal of the Am. Soc. of Gene Therapy</Title>

<ISOAbbreviation>Mol. Ther.</ISOAbbreviation>

</Journal>

<ArticleTitle>Inhibitory Receptors Induced by VSV Viroimmunotherapy

Are Not Necessarily Targets for Improving Treatment Efficacy.</ArticleTitle>

<ELocationID EIdType="doi" ValidYN="Y">10.1016/j.ymthe.2017.01.023</ELocationID>

<Abstract>

<AbstractText>Systemic viroimmunotherapy activates endogenous [...]

</AbstractText>

</Abstract>

<AuthorList CompleteYN="Y">

<Author ValidYN="Y">

<LastName>Sh**</LastName>

<ForeName>Ke*** G</ForeName>

<AffiliationInfo>

<Affiliation>Department of Molecular Med*****, MN 55905, USA;

Medical Scien*****, MN 55905, USA.</Affiliation>

</AffiliationInfo>

</Author>

<Author ValidYN="Y">

<LastName>Za***</LastName>

<ForeName>Sh***</ForeName>

...

Let’s have a closer look at the specific fields included in this output.

- First, this output is XML. We will use the functions from the XML library along with easyPubMed to handle this output

- Fields like <ArticleTitle> or <AbstractText> include info about the general and specific research subject. It is possible to search for keywords in these fields to identify papers published by research groups that may be interested in our products

- Fields like <Author> and <Affiliation> contain the actual names and addresses of the scientists who contributed to the research project, i.e., of potential customers. Interestingly, email addresses may also be included (usually, for corresponding authors only).

- Date fields, such as <DateCreated>, can help filter records based on the year of publication. For example, we may ignore old records (more than 2 or 5 years old) that may no longer be accurate (change in address, email address or research interests).
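To make the list above more concrete, here is a minimal base-R sketch of pulling a few fields out of a record that is stored as a string. The record is a made-up toy example, and grab_tag() is a hypothetical helper written for this illustration (it is not an easyPubMed function, and it only handles simple, non-nested tags).

```r
# Toy record: names, address and email are invented for illustration
record <- paste0(
  "<PubmedArticle><ArticleTitle>A study of T cell receptors.</ArticleTitle>",
  "<Author><LastName>Doe</LastName><ForeName>Jane</ForeName>",
  "<Affiliation>Some University, Boston, MA, USA. jane.doe@example.org</Affiliation>",
  "</Author><DateCreated><Year>2017</Year></DateCreated></PubmedArticle>")

# grab_tag(): hypothetical helper, returns the text enclosed by a given tag
grab_tag <- function(x, tag) {
  pattern <- paste0("(?s)<", tag, "( [^>]*)?>(.*?)</", tag, ">")
  hits <- regmatches(x, gregexpr(pattern, x, perl = TRUE))[[1]]
  hits <- sub(paste0("^<", tag, "( [^>]*)?>"), "", hits)  # drop the opening tag
  sub(paste0("</", tag, ">$"), "", hits)                  # drop the closing tag
}

rec.title <- grab_tag(record, "ArticleTitle")
rec.year <- as.integer(grab_tag(record, "Year"))
rec.last <- grab_tag(record, "LastName")
rec.affi <- grab_tag(record, "Affiliation")

# keyword search in the title and a simple date filter
is.relevant <- grepl("T cell", rec.title, fixed = TRUE)
is.recent <- rec.year >= 2015
```

The same idea (search by keyword, keep names and addresses, filter by date) is what the rest of the post builds on with the dedicated easyPubMed functions.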

Data Preparation

This section is the core of this tutorial and relies on some new functions of the easyPubMed package. Briefly, to prepare the data, we need to complete these steps:

- Query PubMed and retrieve all records that may be relevant to our business goal; in particular, use keywords and filters to limit the results of the initial query (for example, search for articles about “T cells” published from laboratories located in the United States during 2012-2016)

- Extract publication data and author information from each PubMed record

- Impute missing data. Affiliations are usually omitted when identical for several authors in the same record; easyPubMed comes with a tool for imputing addresses when they are omitted for some of the authors in the record

- Filter data; for example, remove authors with an address not located in the United States

- Return the processed data in a tabular format for modeling and/or deployment.

These tasks can be easily performed by combining regular expressions and functions from the XML and easyPubMed packages. Alternatively, I packaged all the required code from this tutorial in the businessPubMed package, which is available on my GitHub (https://github.com/dami82/businessPubMed).

install.packages("easyPubMed")

library(devtools)

install_github("dami82/businessPubMed")

?businessPubMed::extract_pubMed_data

Step 1: PubMed Query

We have already seen how to query PubMed from R. The query can be structured using standard PubMed syntax (more info available here: https://www.ncbi.nlm.nih.gov/books/NBK3827/), and we can apply filters concerning date and location. However, note that filtering by Affiliations containing the keywords “USA” or “United States” will include all records having at least one author from the United States. Additional filtering is therefore required (data understanding) and will be performed at step 4. When the number of records exceeds 5000, easyPubMed requires splitting the data retrieval task into multiple rounds.

my.query <- "\"t cell\"[TI] AND (USA[Affiliation] OR \"United States\")"

my.query <- paste(my.query, "AND (\"2012\"[EDAT] : \"2016\"[EDAT])")

my.idlist <- get_pubmed_ids(my.query)

my.idlist$Count # returns 5379

#

batch.size <- 1000

my.seq <- seq(1, as.numeric(my.idlist$Count), by = batch.size)

#

# Go ahead with the retrieval

pubmed.data <- lapply(my.seq, (function(ret.start){

batch.xml <- fetch_pubmed_data(my.idlist, retstart = ret.start, retmax = batch.size)

#

# keep processing here! Alternatively, output the XML object.

[...]

}))

The lapply “loop” is incomplete here since the data processing part is missing. We will work on this in the next steps. However, this represents the basic framework to retrieve and process all PubMed records. Now, let’s move on.
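To see what the batching arithmetic above produces, here is a small base-R sketch that needs no connection to PubMed. It reuses the count reported above (5379) and treats the offsets as 1-based positions, exactly as the code above does. NCBI documents the E-utilities retstart parameter with a default of 0, so it is worth checking how the easyPubMed version you are using interprets this value.

```r
total.count <- 5379   # value reported by my.idlist$Count in the example above
batch.size <- 1000

# starting position of each batch
my.seq <- seq(1, total.count, by = batch.size)
my.seq
length(my.seq)        # 6 batches

# number of records expected in each batch (the last one is shorter)
batch.end <- pmin(my.seq + batch.size - 1, total.count)
batch.n <- batch.end - my.seq + 1
batch.n
```

Five full batches of 1000 records plus a final batch of 379 records add up to the 5379 records returned by the query.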

Step 2 and 3: Extract record information

In the previous example, each lapply iteration retrieved an XML chunk including several records (stored in the variable batch.xml). To avoid issues, it is safer to process one record at a time. It is possible to extract XML chunks corresponding to a specific field from an XML object via the xpathApply() function (XML package). Briefly, xpathApply() goes through an XML object, identifies elements by a user-defined XML tag, extracts them and applies a user-defined function element-wise. The simplest option is to apply saveXML() to each //PubmedArticle element and convert it to a string. This task is performed automatically by the easyPubMed::articles_to_list() function.

Next, specific fields may be extracted using regular expressions to identify the XML tags of interest. easyPubMed is equipped with a dedicated regex function: custom_grep(). To extract a predefined set of fields from a character-class record via regular expressions, we will rely on the article_to_df() function. This function can automatically impute missing affiliation fields based on the affiliation info of other authors in the same record and the author order (often, duplicated affiliations are omitted in PubMed records). Overall, this approach is not very efficient (indeed, it implies processing large volumes of text), but it is rather safe and simple. FYI, a more efficient way of extracting information is provided in Appendix 1.

# Retrieve a sample "batch.xml"...
batch.xml <- fetch_pubmed_data(my.idlist, retstart = 1, retmax = batch.size)
#
record.list <- easyPubMed::articles_to_list(batch.xml)
tmp.record <- article_to_df(pubmedArticle = record.list[[1]],
                            autofill = TRUE, # impute NA affiliations
                            max_chars = 0)   # do not retrieve abstract
class(tmp.record) # data.frame
colnames(tmp.record)
# [1] "pmid" "doi" "title" "abstract" "year" "month" "day" "jabbrv"
# [9] "journal" "lastname" "firstname" "address" "email"
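The idea behind the affiliation autofill can be sketched in a few lines of base R. This is only an illustration that mirrors the imputation logic shown in Appendix 1 (carry the most recent known address forward, and give authors listed before the first known address that first address). It is not the actual implementation of article_to_df(), and fill_forward() is a hypothetical helper.

```r
# fill_forward(): hypothetical helper written for this illustration
fill_forward <- function(x) {
  known <- which(!is.na(x))
  if (length(known) == 0) return(x)        # nothing to impute from
  # for each position, find the most recent known value
  idx <- findInterval(seq_along(x), known)
  idx[idx == 0] <- 1                       # leading gaps use the first known value
  x[known[idx]]
}

# made-up author addresses, in author order
address <- c(NA, "Dept A, MN, USA", NA, NA, "Dept B, NY, USA", NA)
filled <- fill_forward(address)
filled
```

In this toy example the first four authors end up with "Dept A, MN, USA" and the last two with "Dept B, NY, USA".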

We can loop through the whole list of PubMed records (record.list) and extract the fields of interest as shown above. While the article_to_df() function can successfully process most records, about 0.5% of PubMed records can raise errors because of missing XML fields in the record. The next version of easyPubMed will implement a fix for this problem. In the meantime, the recommended approach is to wrap the expression in a tryCatch() block and to verify the fields in the returned data frame.

xtracted.data <- lapply(1:length(record.list), (function(i){

#

# monitor progress

if (length(record.list) > 60) {

custom.seq <- as.integer(seq(1, length(record.list), length.out = 50))

if (i %in% custom.seq) { message(".", appendLF = FALSE)}

} else {

message(".", appendLF = FALSE)

}

#

# extract info

tmp.record <- tryCatch(easyPubMed::article_to_df(pubmedArticle = record.list[[i]],

autofill = TRUE,

max_chars = 10),

error = function(e) { NULL } )

#

# return data that matter

if (!is.null(tmp.record)) {

required.cols <- c("title", "year", "journal", "lastname", "firstname", "address", "email")

out.record <- data.frame(matrix(NA, nrow = nrow(tmp.record), ncol = length(required.cols)))

colnames(out.record) <- required.cols

match.cols <- colnames(tmp.record)[colnames(tmp.record) %in% required.cols]

out.record[,match.cols] <- tmp.record[,match.cols]

} else {

out.record <- NULL

}

out.record

}))

xtracted.data <-do.call(rbind, xtracted.data)

xtracted.data[100:110,c(4,5,7)] # final data frame, excerpt
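The tryCatch() pattern used above can be tried in isolation with a toy example. In this sketch, parse_record() is a made-up stand-in for a parser that fails on some inputs (it is not part of easyPubMed). Failed records become NULL, and rbind() simply skips them when the list is merged.

```r
# parse_record(): made-up parser that raises an error when a field is missing
parse_record <- function(x) {
  if (!grepl("<LastName>", x, fixed = TRUE)) stop("missing LastName field")
  data.frame(lastname = sub(".*<LastName>(.*)</LastName>.*", "\\1", x),
             stringsAsFactors = FALSE)
}

records <- c("<LastName>Doe</LastName>",
             "<ForeName>Jane</ForeName>",   # this one will fail
             "<LastName>Roe</LastName>")

parsed <- lapply(records, function(x) {
  tryCatch(parse_record(x), error = function(e) NULL)
})

# keep track of how many records could not be processed
failed <- vapply(parsed, is.null, logical(1))
sum(failed)

# NULL elements are dropped when merging
result <- do.call(rbind, parsed)
result
```

Counting the failures is a cheap way to verify that the fraction of skipped records stays small.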

Step 4: data filtering

Here the aim is to remove unwanted data from our data frame. More specifically, in this example we want to remove all data corresponding to affiliations not located in the United States. We can achieve this goal via different approaches. One possibility is: 1) define a filter, i.e. a list of countries that we want to remove; 2) use regular expressions to identify countries in the affiliations and remove unwanted rows; 3) return the filtered data. A list of “Countries of the world” can be easily scraped from the Web. Just make sure to remove “United States” from the filter. For example.

# Scrape a list of countries from the Web

tmp <- httr::GET("https://www.countries-ofthe-world.com/all-countries.html")

tmp =toString(httr::content(tmp))

pos.x = regexpr("<ul[[:space:]]class=\"column\">.*</ul>", tmp)

tmp = substr(tmp, pos.x, pos.x - 1 + attributes(pos.x)$match.length)

countries <- custom_grep(tmp, "li", format = "char")

countries <- gsub("(\\(.*\\))|(<)(.*)(>)", "", countries)

countries <- gsub("((^[[:space:]]*)|([[:space:]]*$))", "", countries)

countries <- countries[nchar(countries) > 2]

countries <- countries[!countries %in% c("United States of America", "Countries of the World")]

head(countries)

#

# Convert the Country list to a regex filter

countries.filter <- gsub("[[:punct:]]", "[[:punct:]]", toupper(countries))

countries.filter <- gsub(" ", "[[:space:]]", countries.filter)

countries.filter <- paste("(",countries.filter,")", sep = "", collapse = "|")

countries.filter

Now, let’s remove all rows with missing values in the address field or with affiliations matching our filter. I’ll keep the code to a minimum here. Of course, filtering will require much more effort in real-world scenarios. However, these few lines nicely illustrate the goal of this Data preparation step.

xtracted.data <- xtracted.data[!is.na(xtracted.data$address),]
xtracted.data <- xtracted.data[regexpr(countries.filter, toupper(xtracted.data$address)) < 0,]
head(xtracted.data)
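Here is a self-contained toy version of the filter, using a short made-up list of countries and invented addresses. It also shows one caveat of plain substring matching: a US address containing "New Mexico" is removed because it matches "MEXICO". The second filter, which only looks for a country name at the very end of the address, is just one possible refinement and assumes that addresses end with the country name (it would miss addresses ending with an email, for example).

```r
countries <- c("Canada", "Germany", "Guinea-Bissau", "Mexico")  # short made-up list

# same three steps used above to build the regex filter
countries.filter <- gsub("[[:punct:]]", "[[:punct:]]", toupper(countries))
countries.filter <- gsub(" ", "[[:space:]]", countries.filter)
countries.filter <- paste("(", countries.filter, ")", sep = "", collapse = "|")

addresses <- c("Dept of Immunology, Rochester, MN 55905, USA",
               "Institute of Virology, Berlin, Germany.",
               "University of New Mexico, Albuquerque, NM, USA",
               NA)

# filter as in the post: drop NAs and any address matching a country name
keep <- !is.na(addresses) & regexpr(countries.filter, toupper(addresses)) < 0
addresses[keep]

# possible refinement (assumption): match the country only at the end of the address
end.filter <- paste0("(", countries.filter, ")[[:punct:][:space:]]*$")
keep.strict <- !is.na(addresses) & regexpr(end.filter, toupper(addresses)) < 0
addresses[keep.strict]
```

With the plain filter only the Rochester address survives; with the end-anchored filter the Albuquerque address is kept as well.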

Step 5: prepare and return

We covered all the steps required to process one batch of PubMed records. All these lines of code are supposed to be included in the lapply loop shown in the section “Step 1: PubMed Query”. At each iteration, this lapply loop will output a curated data frame that we can later merge using do.call(). The final code has been included in the businessPubMed package that is available on my GitHub (dami82/businessPubMed). You can install it using devtools (as shown at the beginning of the post). The examples discussed below make use of the functions from businessPubMed. This completes the Data Preparation section. Let’s now go ahead and play with businessPubMed and have a look at the results.

Debriefing

Let’s proceed with a test run of our code. If relying on businessPubMed, it is possible to run the analysis with a limited number of lines of code.

# install the package via > devtools::install_github("dami82/businessPubMed")

library(businessPubMed)

#

# define a research subject

res.subject <- "t cell"

#

# define a PubMed Query string

my.query <- paste("\"", res.subject, "\"[TI]", sep = "")

my.query <- paste(my.query, "AND (USA[Affiliation] OR \"United States\")")

my.query <- paste(my.query, "AND (\"2012\"[EDAT] : \"2016\"[EDAT])")

#

# define the regex filter

data("countries")

countries <- countries[countries != "United States of America"]

countries.filter <- gsub("[[:punct:]]", "[[:punct:]]", toupper(countries))

countries.filter <- gsub(" ", "[[:space:]]", countries.filter)

countries.filter <- paste("(",countries.filter,")", sep = "", collapse = "|")

countries.filter <- paste("(UK)|", countries.filter, sep = "")

countries.filter

#

# extract data from PubMed

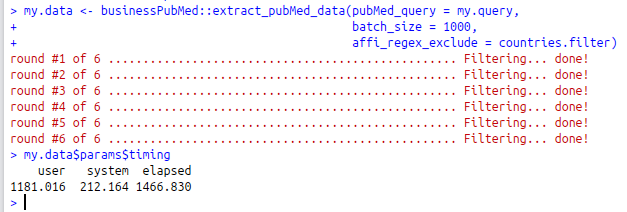

my.data <- businessPubMed::extract_pubMed_data(pubMed_query = my.query,

batch_size = 1000,

affi_regex_exclude = countries.filter)

The output (my.data) is a list including two elements. The first element ($params) is a list including the parameters used for the analysis (query string, batch number, time required to complete the analysis). The second element ($data) includes the results formatted as a data.frame. On a system equipped with an i7, 16 GB RAM, Ubuntu 16.04 LTS and a fast Ethernet connection, the previous job (5377 PubMed records retrieved, 29880 extracted rows) was successfully completed in about 25 min (elapsed time is expressed in seconds) without using parallelization.

This returned 8000+ unique addresses and 1500+ unique email addresses.

sum(!is.na(unique(my.data$data$address)))
[1] 8488
sum(!is.na(my.data$data$email))
[1] 1951
sum(!is.na(unique(my.data$data$email)))
[1] 1563

Each address is linked to names and information about the corresponding scientific publications. Therefore, it is possible to prepare tailored messages. For example:

tmp.addressee <- my.data$data[1,]

mail.text <- paste("\n", tmp.addressee$address, "\n\n", "Dear Dr. ", tmp.addressee$lastname, " ",

tmp.addressee$firstname, ", \n\n I really appreciated reading your ",

tmp.addressee$year ," manuscript that was published on ",

tmp.addressee$journal, ". I believe that your work on ",

gsub("[[:punct:]]$", "", tmp.addressee$title),

" may really benefit from the products included in our offer. ",

"For example, we sell a product specifically designed for research on ",

res.subject, " and I am happy to include a special offer that you may find ",

"very interesting. \n[...] Feel free to contact me at the following ",

"address XXXXX... \n \nRegards. \n \nJohn Doe \nCompany XYZ", sep = "")

#

cat(mail.text)

This will result in:

Department of Internal Medicine, Division of Hematology and Oncology, University of Mxxxxxx, Yyy Yyyyyy, MI USA

Dear Dr. Phxxxxx Tyxxx,

I really appreciated reading your 2016 manuscript that was published on Journal for immunothxxxx of xxxx. I believe that your work on Challenges and opportunities for checkpoint blockade in T-cell lymphoproliferative disorders may really benefit from the products included in our offer. For example, we sell a product specifically designed for research on t cell and I am happy to include a special offer that you may find very interesting.

[…] Feel free to contact me at the following address XXXXX…

Regards.

John Doe

Company XYZ

You can automatically compile mails or emails like in the example above for all the elements (rows) in the data.frame extracted using easyPubMed and businessPubMed. Alternatively, you can focus only on those entries having a valid email address. Such a strategy may be employed to promote a new service from your company or maybe (who knows) to enquire about post-doctoral openings in laboratories working on a specific subject and publishing in high-impact journals. Of course, you can set a filter for the journal name as well as any other field. There are a lot of opportunities.
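As a rough sketch of compiling messages for several rows at once, the example below uses a small made-up data frame with the same column names returned in $data, keeps only the rows with an email address, and fills a simplified template with sprintf(). The contacts and the message template are invented for illustration.

```r
# made-up contacts, with the same column names used in the post
contacts <- data.frame(
  lastname = c("Doe", "Roe", "Poe"),
  year = c("2016", "2015", "2016"),
  journal = c("Journal A", "Journal B", "Journal C"),
  title = c("T cell exhaustion in chronic infection.",
            "Regulatory T cell subsets.",
            "T cell receptor signaling."),
  email = c("jane.doe@example.org", NA, "e.poe@example.org"),
  stringsAsFactors = FALSE)

# focus on entries having an email address
with.email <- contacts[!is.na(contacts$email), ]

# sprintf() is vectorized: one message per row
messages <- sprintf(
  "Dear Dr. %s,\n\nI really appreciated reading your %s manuscript published in %s. I believe that your work on %s may benefit from our products.\n\nRegards.\nJohn Doe",
  with.email$lastname,
  with.email$year,
  with.email$journal,
  gsub("[[:punct:]]$", "", with.email$title))
names(messages) <- with.email$email

cat(messages[1])
```

Each element of messages is named after the recipient's email address, so the vector can be handed over to whatever tool is used for the actual delivery.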

Conclusions

I illustrated how to extract information from PubMed via the easyPubMed package. I used XML and regular expressions for retrieving data of interest that may be suitable for starting a targeting campaign. The steps covered in this tutorial may be easily implemented as part of the Data Preparation step in the context of a Data Mining workflow aimed at predicting, for example, the response of research groups to certain offers based on recently published scientific papers. There are endless opportunities to build on and improve my code and my approach. If you are interested in this, please feel free to leave a comment or send me a message. I am always open to new collaborations or joining a project.

Thanks.

Posted by Damiano Fantini, Ph.D.

easyPubMed and businessPubMed are available free-of-charge under the GPL-2 license and are provided as is without warranty of any kind. Using this software implies acceptance of NIH policies on PubMed data access and use.

Appendix 1

Alternatively, it is possible to retrieve values from any record-unique field, such as <ArticleTitle>, <Journal> or <AuthorList>. The strategy here is to directly extract all record titles, journal info and authorLists at once (pros: faster than converting each PubmedArticle to String; cons: one missing or extra tag will raise an issue). Next, we will loop through the list(s) and prep the data to return.

batch.xml <- fetch_pubmed_data(my.idlist, retmax = 1000) # sample XML batch
#
# option 1, simple: convert each record to a string
batch.xml.list <- XML::xpathApply(batch.xml, "//PubmedArticle", XML::saveXML)
class(batch.xml.list) # list
class(batch.xml.list[[1]]) # character
#
# option 2: extract record-unique fields directly
all_titles <- XML::xpathApply(batch.xml, "//ArticleTitle", XML::saveXML) # list, length = 1000
all_journals <- XML::xpathApply(batch.xml, "//Journal", XML::saveXML) # list, length = 1000
all_authors <- XML::xpathApply(batch.xml, "//AuthorList", XML::saveXML) # list, length = 1000

The following steps will be executed in a record-wise manner, will rely on the trim_address() and the custom_grep() functions from easyPubMed and will focus on:

- further extract publication- and author- specific sub-fields: name, address, email?

- remove tags and clean values

- put everything together and return a data frame

The following code may look a little complex, but on a second look it turns out to be fairly easy to read. Indeed, it only includes operations that are very similar to what was discussed above. However, if you have questions, don’t hesitate to send me a comment or an email.

if (length(all_titles) == length(all_journals) &

length(all_journals) == length(all_authors))

{

data.out <- lapply(1:length(all_titles), (function(i){

#

if (length(all_titles)>100){

if (i %in% as.integer(seq(1, length(all_titles), length.out = 50 )))

message(".", appendLF = FALSE)

}

# Record specific info

tmp.title <- custom_grep(all_titles[[i]], "ArticleTitle", format = "char")

if (is.null(tmp.title)) { tmp.title <- NA }

tmp.year <- custom_grep(all_journals[[i]], "Year", format = "char")

if (is.null(tmp.year)) { tmp.year <- NA }

tmp.jname <- custom_grep(all_journals[[i]], "Title", format = "char")

if (is.null(tmp.jname)) { tmp.jname <- NA }

# Author specific stuff, requires an extra loop

tmp.authlist <- custom_grep(all_authors[[i]], "Author", format = "list")

if ((!is.null(tmp.authlist)) & length(tmp.authlist) > 0) {

out <- lapply(tmp.authlist, (function(auth){

tmp.last <- custom_grep(auth, "LastName", format = "char")

tmp.last <- ifelse(length(tmp.last) > 0, tmp.last, NA)

#

tmp.first <- custom_grep(auth, "ForeName", format = "char")

tmp.first <- ifelse(length(tmp.first) > 0, tmp.first, NA)

#

tmp.affi <- custom_grep(auth, "Affiliation", format = "char")

if (!is.null(tmp.affi)) {

tmp.affi <- trim_address(tmp.affi[1])

} else {

tmp.affi <- NA

}

tmp.email <- regexpr("([[:alnum:]]|\\.|\\-|\\_){3,200}@([[:alnum:]]|\\.|\\-|\\_){3,200}(\\.)([[:alnum:]]){2,6}",

auth)

if (tmp.email > 0) {

tmp.email <- substr(auth, tmp.email[1], tmp.email[1] -1 +

attributes(tmp.email)$match.length[1])

} else {

tmp.email <- NA

}

c(title = tmp.title[1],

year = tmp.year[1],

journal = tmp.jname[1],

firstName = tmp.first[1],

lastName = tmp.last[1],

address = tmp.affi[1],

email = tmp.email[1])

}))

out <- suppressWarnings(data.frame(do.call(rbind, out), stringsAsFactors = FALSE))

#

# impute addresses

out <- out[!is.na(out$lastName),]

ADDKEEP <- !is.na(out$address)

if(sum(ADDKEEP) > 0 ) {

ADDKEEP <- which(ADDKEEP)

RESIDX <- rep(ADDKEEP[1], nrow(out))

for (zi in 1:nrow(out)) {

if(zi %in% ADDKEEP)

RESIDX[zi:nrow(out)] <- zi

}

out$address <- out$address[RESIDX]

}

out

#

} else {

NULL

}

}))

do.call(rbind, data.out)

}

The code shown above has been included in the extract_pubMed_fast() function of the businessPubMed package. The arguments of an extract_pubMed_fast() call are identical to those of an extract_pubMed_data() call and the results returned by the two functions were exactly the same for the sample query. The only difference, as expected, is the elapsed time, with the “fast” algorithm about 10% faster than the standard counterpart.
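The email extraction step of the code above can be tested on its own. The sketch below uses regmatches() instead of substr(), together with a simplified pattern written with bracket expressions. Both the pattern and the extract_email() helper are illustrative assumptions (they are not taken from easyPubMed or businessPubMed), and the address is made up.

```r
# simplified email pattern (assumption, not the one used by the packages)
email.pattern <- "[[:alnum:]._-]+@[[:alnum:].-]+\\.[[:alpha:]]{2,6}"

# extract_email(): hypothetical helper, returns the first email found or NA
extract_email <- function(x) {
  m <- regexpr(email.pattern, x)
  if (m[1] > 0) regmatches(x, m) else NA_character_
}

affi.1 <- "Dept of X, City, MN 55905, USA. Electronic address: jane.doe-smith@uni-example.edu."
affi.2 <- "Dept of Y, City, NY 10001, USA."

email.1 <- extract_email(affi.1)
email.2 <- extract_email(affi.2)
email.1
email.2
```

Note that the trailing period of the affiliation string is not included in the extracted address, and that an affiliation without an email returns NA.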

Damiano, I am trying to get ALL of the authors’ affiliations for over 2 million publications using PMIDs from csv files downloaded from NIH ExPorter. THANK YOU! Your example above is helpful and I am working on modifications for just using PMID. There may have been updates to the package b/c in using your code above I have found that I needed to use “get_pubmed_ids” instead of “getPubmedIds” (line 5) and “fetch_pubmed_data” instead of “fetchpubmeddata” (line 15), and all seems to work fine except for some truncation of the addresses (still working on that) and the affiliation data.

You’re very right! I should have updated this post earlier… I’ll take care of this asap. Also, funny thing, I am working on an update of the package that will include some functions for retrieving author information out of the box. You may be really interested.. Please, stay tuned…

Hi Damiano,

This was very useful. Thanks a lot. I could not download the businesspubmed package from Rstudio. Could you kindly let me know how to download.

Secondly, could we extract author address details for specific pubmed IDs – if so please share the code.

thanks

Hello Venkat,

most of the tools that were initially developed for ‘businesspubmed’ have been included in easyPubMed. Also, businesspubmed was built on top of an old version of easyPubMed… Things have changed quite a bit since then. I would recommend having a look at the most recent vignette of easyPubMed. Feel free to email me with more specific questions. Thank you.

Hi Damiano,

Thank you for this blog. It’s really helpful.

I have one doubt. I am running the same code (using EasyPubMed package), but the record list sometimes stores 1000, sometimes 2000, 5000. I am not sure why would this be? I am keeping the batch.size same in all the cases which is greater than 5000 in my case. Shouldn’t it always then take 5000 records?

Thanks for all the help! 🙂

Hello,

the functions included in the easyPubMed package can download/retrieve a number of records up to “batch.size” (typically, up to 5000), but it really depends on the query you performed (does your query return 5000 results?). I disabled the possibility of downloading too many (more than 5000) records at once, as this might reduce efficiency and increase the chances of server timeouts. Anyways, you can always fine tune your analyses by setting the retmax and retstart arguments. Feel free to email me a reproducible example, if needed. I guess I could help you with the code, if needed.

Thanks for using easyPubMed.